01. About Us

Core technologies in my stack:

- Python & Scala
- Databricks
- Apache Spark
- Snowflake
- AWS / GCP / Azure
- LLM Integration

02. Services

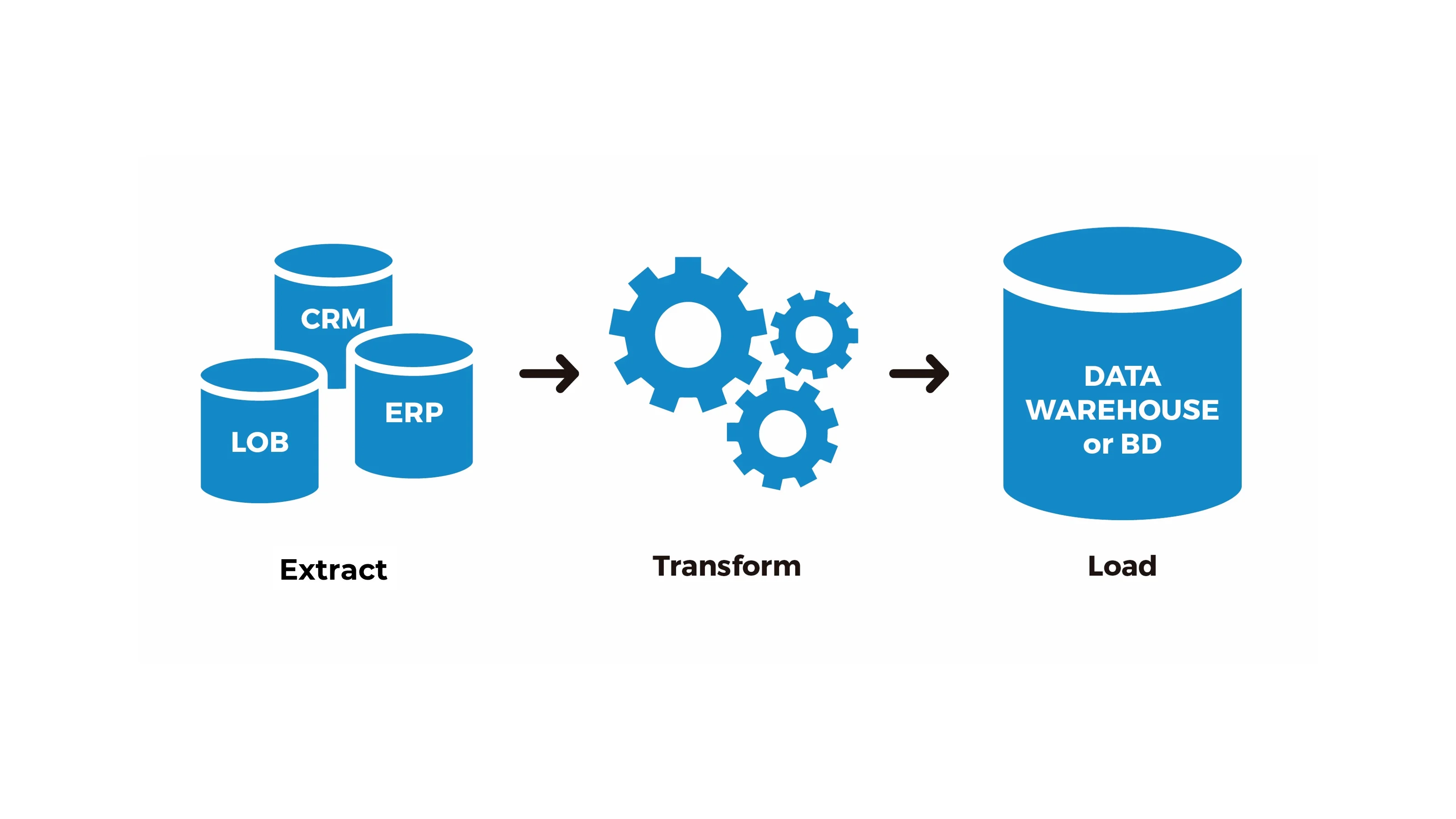

Data Engineering

Enterprise-grade data pipeline and analytics platform development. We design and implement modern data architectures that process millions of records efficiently, ensuring data quality and reliability for mission-critical business operations.

Cloud & MLOps

Cloud-native ML operations and infrastructure solutions. We deliver end-to-end ML pipelines, model deployment automation, and monitoring systems across multi-cloud architectures, ensuring reliable and scalable AI/ML model lifecycle management.

AI & LLM Integration

Intelligent solutions powered by large language models and retrieval-augmented generation. We build production-ready AI applications leveraging semantic search, embeddings, and context-aware responses for sophisticated natural language understanding.

03. Highlighted Projects

AI-Powered Analytics

Enterprise Data Warehouse with LLM Integration

Architected and implemented an enterprise-grade Snowflake data warehouse from the ground up. It handles 1M+ data ingests per month and includes LLM-powered analytics built on the Claude and GPT-4 APIs.

Designed optimized star and snowflake schemas with targeted clustering strategies and tuned virtual warehouse configurations. This achieved sub-second query performance on 5TB+ datasets while reducing compute costs by 15%.

Engineered robust ETL pipelines using Python (Pandas, NumPy), Scala (Apache Spark), and AWS Glue, with built-in LLM API integration. Implemented a RAG (Retrieval-Augmented Generation) architecture that uses vector databases for semantic search. The system processes 20GB+ of telemetry data daily with 99.8% accuracy and improved anomaly detection by 35%.

- Snowflake, Python, Claude API, GPT-4, AWS
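The retrieval step of a RAG setup like the one above can be sketched in a few lines. Each document is embedded, ranked by cosine similarity to the query embedding, and the top matches are placed into the LLM prompt as context.

This is an illustrative sketch under stated assumptions, not the project's implementation. The `embed`, `top_k` and `build_prompt` functions and the `DIM` constant are all hypothetical. `embed` is a toy token-hashing embedder that stands in for a real embedding model. A plain in-memory list with brute-force ranking stands in for a vector database.

```python
import zlib

import numpy as np

DIM = 256  # hypothetical embedding size for the toy embedder


def embed(text: str) -> np.ndarray:
    """Hypothetical toy embedder: hashes tokens into a unit-length bag-of-words vector.
    Stands in for a real embedding model (an assumption, not the project's model)."""
    vec = np.zeros(DIM)
    for token in text.lower().split():
        vec[zlib.crc32(token.encode()) % DIM] += 1.0  # deterministic hash bucket
    norm = np.linalg.norm(vec)
    return vec / norm if norm else vec


def top_k(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query (brute-force search)."""
    doc_matrix = np.stack([embed(d) for d in docs])  # shape (n_docs, DIM), unit rows
    scores = doc_matrix @ embed(query)  # dot product of unit vectors = cosine similarity
    order = np.argsort(scores)[::-1][:k]  # highest similarity first
    return [docs[i] for i in order]


def build_prompt(query: str, docs: list[str], k: int = 2) -> str:
    """Assemble an LLM prompt from the retrieved context (hypothetical template)."""
    context = "\n".join(f"- {d}" for d in top_k(query, docs, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    docs = [
        "sensor 7 temperature spike detected at night",
        "quarterly revenue report for retail",
        "pressure anomaly on sensor 3 after maintenance",
    ]
    print(build_prompt("which sensor had an anomaly", docs, k=2))
```

The toy embedder only matches overlapping words. A real embedding model would rank by meaning instead, and a vector database would replace the brute-force matrix product once the corpus grows. The retrieve-then-prompt flow stays the same.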

Real-Time Streaming Analytics

Multi-Channel Conversational Data Platform

Engineered a high-throughput data platform processing millions of real-time conversational interactions across multiple channels, enabling businesses to derive actionable insights from customer communications.

Architected multi-cloud data infrastructure (AWS, Azure, GCP) optimized for latency, resilience, and cost efficiency. Implemented event-driven ingestion pipelines on serverless AWS Lambda and on Kubernetes, which scale asynchronously with incoming load.

Designed and deployed Databricks pipelines for both real-time streaming and batch processing of conversational interaction data. Built analytics-ready data models in Snowflake to support reporting, monitoring, and cross-channel behavioral insights.

Established a data governance framework covering cataloging, lineage tracking, and observability. The resulting solutions delivered measurable impact for clients in the retail, fintech, hospitality, and gaming sectors.

- Databricks, Snowflake, AWS Lambda, Kubernetes, Golang

Payment Analytics Platform

High-Volume Transaction Data Infrastructure

Architected data infrastructure for a high-volume payment processing platform handling 500K+ daily transactions, enabling real-time fraud detection and business intelligence capabilities for digital commerce.

Designed an event-driven data architecture on AWS (Lambda, DynamoDB, RDS). It combined Kafka streaming, Kubernetes orchestration, and asynchronous SNS/SQS processing pipelines for reliable, scalable data ingestion.

Implemented an analytics layer combining three components. Snowflake serves as the data warehouse, Athena queries the S3 data lakes, and Databricks pipelines handle real-time fraud detection and batch transaction analytics.

Delivered secure, performant data solutions that enabled advanced fraud detection, improved transaction monitoring, and powered business intelligence across digital and physical payment channels.

- Snowflake, Kafka, AWS, Kotlin, Databricks

These are highlights from my recent work. For a complete overview of my experience, visit my LinkedIn profile.

04. Ask for a Demo

I'm available for data engineering consulting engagements. Whether you need help architecting data infrastructure, implementing AI solutions, or have questions about your data strategy, I'd be happy to discuss how I can help.